As technology advances, scientists from around the globe have been investigating the use of AI to help recognize animal pain signals.

Through computerized facial expression analysis, this AI technology can quickly and accurately recognize pain signals in animals. In some cases, AI is better at this task than some humans!

This AI technology has been used in animals from sheep to horses to cats.

One example is the Intellipig system, developed by scientists at the University of the West of England Bristol (UWE) and Scotland’s Rural College (SRUC).

Intellipig examines photos of pigs’ faces and notifies farmers if there are signs of pain, sickness, or emotional distress.

Facial Expressions in Animals

Like humans, animals convey how they’re feeling through their facial expressions. In fact, humans share 38% of our facial movements with dogs, 34% with cats and 47% with primates and horses.

But, as an article in Science points out, “the anatomical similarities don’t mean we can read animals’ faces like those of fellow humans. So, researchers studying animal communication often infer what an animal is experiencing through context”.

Pain is one example: researchers can induce mild discomfort, or watch for pain signals after an invasive procedure such as castration.

After spending countless hours observing the faces of animals in painful or stressful situations, scientists can then compare them against the faces of animals that are free of pain and stress.

As a result, scientists developed “grimace scales,” which provide a measure of how much pain or stress an animal is experiencing based on the movement of its facial muscles.

In addition, like the Facial Action Coding System (FACS) used on humans, experts have also become skilled at coding facial movements in animals (AnimalFACS).

At present, FACS has been adapted for eight species, and the manuals are freely accessible through the animalfacs.com website:

- ChimpFACS: common chimpanzees

- MaqFACS: rhesus macaques

- GibbonFACS: hylobatid species

- OrangFACS: orangutans

- DogFACS: domestic dogs

- CatFACS: cats

- EquiFACS: domestic horses

- CalliFACS: marmoset species

However, coding work is incredibly tedious, and human coders need 2 to 3 hours to code 30 seconds of video.

This is where AI comes in.

AI can do the same task almost instantaneously, but first it must be taught.

Teaching AI to Read Animal Faces

At the University of Haifa, Anna Zamansky and her team have been using AI to pinpoint the subtle signs of discomfort in animals’ faces.

Teaching AI to read animal faces involves several steps:

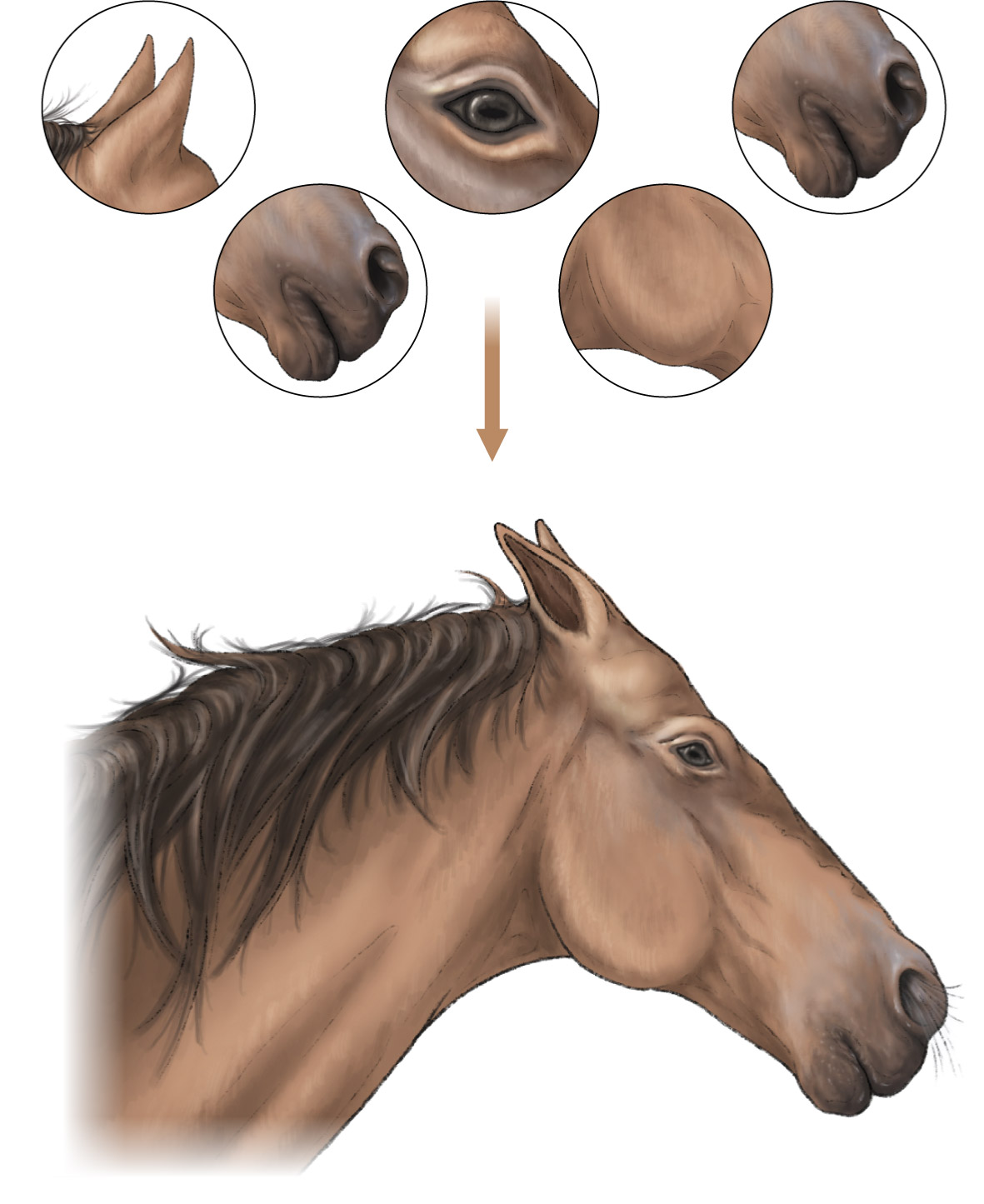

- Teaching the AI to identify the parts of the face that are crucial to creating expressions (this is done by manually flagging the parts of the face associated with specific muscle movements).

- Feeding the AI a large set of landmarked photos so that it learns to find landmarks on its own.

- Having the AI identify specific facial expressions by analyzing the distances between landmarks.

- Cross-referencing those expressions against grimace scales to determine signs of pain or distress.
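
To make the last two steps more concrete, here is a minimal Python sketch of how distances between landmarks might be turned into comparable measurements. It is an illustration only, not the method used by Zamansky’s team: the landmark names, the pixel coordinates, and the 15% tolerance are all hypothetical, and a simple comparison against a relaxed baseline stands in for a real, species-specific grimace scale. In practice the landmarks would come from a trained model rather than being typed in by hand.

```python
import math

# Hypothetical landmark names mapped to (x, y) pixel coordinates.
Landmarks = dict[str, tuple[float, float]]


def distance(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Straight-line distance between two landmark points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def normalized_features(lm: Landmarks) -> dict[str, float]:
    """Landmark distances divided by the eye-to-eye distance,
    so the numbers do not depend on how large the face is in the photo."""
    scale = distance(lm["left_eye"], lm["right_eye"])
    return {
        "ear_gap": distance(lm["left_ear_tip"], lm["right_ear_tip"]) / scale,
        "eye_opening": distance(lm["eye_top"], lm["eye_bottom"]) / scale,
    }


def deviating_features(features: dict[str, float],
                       baseline: dict[str, float],
                       tolerance: float = 0.15) -> list[str]:
    """Names of features that differ from the relaxed baseline by more than
    `tolerance` (relative change). A placeholder for grimace-scale scoring."""
    return [
        name for name, value in features.items()
        if abs(value - baseline[name]) / baseline[name] > tolerance
    ]


# Made-up coordinates for a relaxed face and a tense face.
relaxed = {
    "left_eye": (40, 50), "right_eye": (80, 50),
    "eye_top": (40, 45), "eye_bottom": (40, 55),
    "left_ear_tip": (30, 10), "right_ear_tip": (90, 10),
}
tense = {
    "left_eye": (40, 50), "right_eye": (80, 50),
    "eye_top": (40, 47), "eye_bottom": (40, 53),
    "left_ear_tip": (20, 15), "right_ear_tip": (100, 15),
}

baseline = normalized_features(relaxed)
flagged = deviating_features(normalized_features(tense), baseline)
print(flagged)  # ['ear_gap', 'eye_opening']
```

Dividing by the eye-to-eye distance is one simple way to keep a close-up photo and a distant photo of the same face comparable; a real system would likely need to account for head angle and breed differences as well.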

Zamansky’s team trained their AI on photos of Labrador retrievers who were either eagerly anticipating a treat or were able to see the treat but were prevented from reaching it.

Their AI correctly identified whether the dog was happy or frustrated 89% of the time.

The AI also successfully differentiated happy and frustrated horses in the same experiment.

Despite some limitations to their technology, Zamansky’s team is about to release an AI-based app that will allow cat owners to scan their pets’ faces for 30 seconds and get easy-to-read messages.

The technology also extends to horses: researchers in the Netherlands have developed a similar app that scans resting horses’ faces and bodies to estimate their pain levels.

This app could potentially be used in equestrian competitions to improve animal welfare and fairness in the sport.

The post Can Artificial Intelligence (AI) Read Animal Emotions? first appeared on Humintell | Master the Art of Reading Body Language.

Guest Blog by AnnMarie Baines
