When understanding how other cultures express emotions, it is almost as important to reflect on our own cultural norms as it is to recognize differing ones.

This is essentially what Humintell’s Dr. David Matsumoto and his team found in a recent publication. They studied the role that one’s own cultural norms and sense of emotional regulation play in evaluating the expressions of other people. Excitingly, they found that both our cultural norms for emotional displays and our own emotional regulation are closely linked to how we evaluate other people’s emotional states.

Their study sought to address the challenges in recognizing the often muted expressions of those from more subdued emotional cultures, but it also hoped to disentangle the perceiver’s own expectations and judgments from their evaluations.

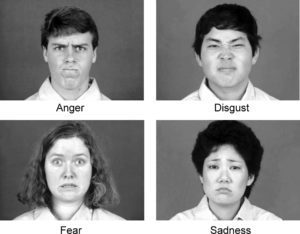

To accomplish these aims, Dr. Matsumoto and his team conducted two studies. Both asked participants to identify the expression displayed in a series of images of faces and to rate the intensity of that expression. Notably, the judges were split between English speakers raised in the United States and native-born Japanese participants, and the pictures included both American and Japanese faces.

In the first of these studies, judges were also asked to report the intensity of their own emotional state while judging images of faces, and they completed a measure intended to capture “cultural display rules,” or the extent to which a culture encourages intense emotional expressions.

They found that cultural differences accounted for significant variation in how the judges evaluated the intensity of expressions, with Japanese judges tending to infer more emotion from an expression than American judges did.

The second study built on this work by replicating the same experiment, only this time asking judges to evaluate their own emotional responsiveness. Dr. Matsumoto connects this to cultural display rules, because both have to do with the “management and modification of emotional expressions and reactions.”

After being shown expressive images, the judges would again make judgments as to the intensity of the emotion displayed, but this time they would also complete self-reported measures of emotional regulation. The results suggested that emotional regulation was at least as strong in mediating judgments as cultural norms.

The fact that cultural display norms and one’s own emotional regulation both mediate our perception of others’ emotions has profound implications for anyone trying to get better at reading people. It is not enough to learn other people’s cultures; we also have to critically reflect on our own norms, both personal and cultural.

This makes the process of emotional recognition just that much harder, which is why Humintell is trying to help by training you in the skill of reading people and understanding cultural differences.
